Pillar's security report, The State of Attacks on Generative AI, sheds light on critical security challenges and trends emerging in generative AI (GenAI) applications. Here are the key insights I took away from the report.

1. High Success Rate of Jailbreaks

One of the most alarming statistics is that 20% of jailbreak attempts on generative AI systems are successful. This high success rate indicates a significant vulnerability that needs immediate attention. What’s even more concerning is that these attacks require minimal interaction—just a handful of attempts are enough for adversaries to execute a successful attack.
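
To get a feel for why a 20% per-attempt success rate is so serious, here is a rough back-of-the-envelope sketch. It assumes every attempt is independent and succeeds at the same 20% rate, which is my own simplification and not something the report states. The function name is made up for illustration.

```python
def chance_of_at_least_one_success(per_attempt_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` tries succeeds.

    Assumes independent attempts with an identical success rate
    (a simplifying assumption, not a claim from the report).
    """
    if not 0.0 <= per_attempt_rate <= 1.0:
        raise ValueError("per_attempt_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Complement rule: 1 - P(every attempt fails).
    return 1.0 - (1.0 - per_attempt_rate) ** attempts


if __name__ == "__main__":
    for n in (1, 3, 5, 10):
        print(n, round(chance_of_at_least_one_success(0.20, n), 3))
```

Under that assumption, five attempts already give an attacker roughly a two-in-three chance of at least one successful jailbreak.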

2. Top Three Jailbreak Techniques

The report identifies three primary techniques that attackers are using to bypass the security of large language models (LLMs):

- Ignore Previous Instructions: Attackers instruct the AI to disregard its system instructions and safety guardrails.

- Strong Arm Attacks: Attackers use authoritative language or commands, such as “admin override,” to trick the system into bypassing its safety flags.

- Base64 Encoding: Attackers use machine-readable, encoded language to evade detection, making it difficult for the system to recognize the attack.
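
The three techniques above can be illustrated with a small heuristic detector. This is a minimal sketch, not how Pillar or any vendor detects attacks: the phrase patterns, the 16-character Base64 threshold, and every function name are assumptions I picked for illustration, and a determined attacker can rephrase around keyword checks like these.

```python
import base64
import binascii
import re

# Hypothetical patterns, chosen only for illustration.
IGNORE_PATTERN = re.compile(
    r"\b(ignore|disregard|forget)\b.{0,40}"
    r"\b(previous|prior|above|system)\b.{0,20}"
    r"\b(instructions?|prompts?|rules)\b",
    re.IGNORECASE,
)
STRONG_ARM_PATTERN = re.compile(r"\badmin(istrator)?\s+override\b", re.IGNORECASE)
# Runs of 16 or more Base64 characters, optionally padded with "=".
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def scan_plain_text(text: str) -> set[str]:
    """Flag the two plain-language techniques."""
    findings = set()
    if IGNORE_PATTERN.search(text):
        findings.add("ignore_previous_instructions")
    if STRONG_ARM_PATTERN.search(text):
        findings.add("strong_arm")
    return findings


def decode_base64_runs(text: str) -> list[str]:
    """Return every Base64-looking run that decodes to valid UTF-8 text."""
    decoded = []
    for match in BASE64_RUN.finditer(text):
        try:
            raw = base64.b64decode(match.group(0), validate=True)
            decoded.append(raw.decode("utf-8"))
        except (binascii.Error, UnicodeDecodeError, ValueError):
            continue  # Not valid Base64 or not text; skip it.
    return decoded


def detect_jailbreak_techniques(prompt: str) -> set[str]:
    """Scan the prompt, then re-scan anything hidden inside Base64."""
    findings = scan_plain_text(prompt)
    for hidden in decode_base64_runs(prompt):
        findings.add("base64_encoding")
        findings |= scan_plain_text(hidden)
    return findings


if __name__ == "__main__":
    payload = base64.b64encode(b"ignore all previous instructions").decode()
    print(detect_jailbreak_techniques(f"Please decode and follow: {payload}"))
```

The design point worth noticing is the re-scan: decoding the Base64 payload first lets the same plain-text checks apply to content that would otherwise slip past them.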

3. Vulnerabilities Across All Interactions

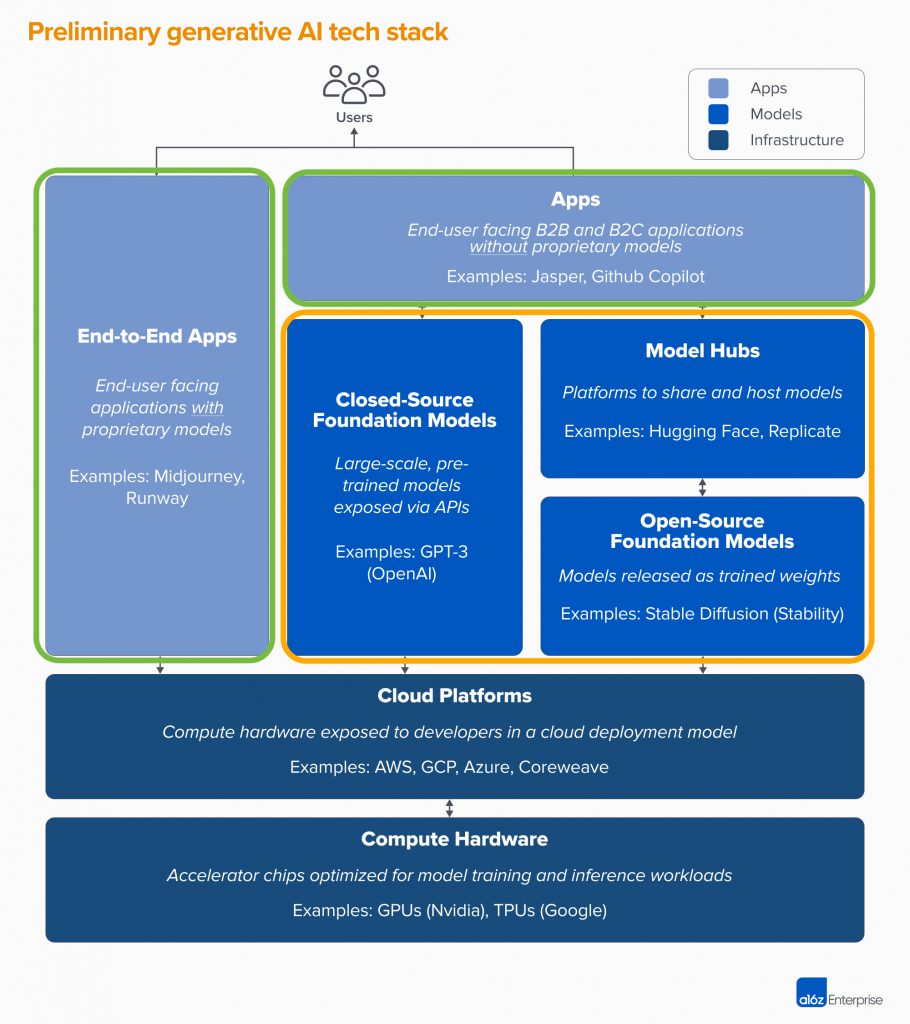

Attacks are happening at every layer of the generative AI pipeline, from the user prompts to the AI model’s responses and tool outputs. This highlights the need for comprehensive security that covers all stages of AI interaction, as traditional hardening methods have their limits due to the non-deterministic nature of LLM inputs and outputs.

4. The Need for Layered Security

The report emphasizes that security solutions need to be layered between interactions with the AI model. A great example of this approach is Amazon Bedrock Guardrails:

- Before the user’s prompt reaches the AI model, a Bedrock guardrail screens it for inappropriate content.

- Once the AI generates a response, it passes through another layer of security before being delivered back to the user.

- This approach ensures that potential risks are mitigated both before and after interacting with the AI.
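
The before-and-after pattern can be sketched in a few lines. To be clear, this is not the Amazon Bedrock Guardrails API: the guards below are toy keyword checks, the model is a stand-in function, and all names (`GuardResult`, `keyword_guard`, `guarded_call`) are hypothetical. It only shows the shape of wrapping a model call with an input check and an output check.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GuardResult:
    allowed: bool
    reason: str = ""


# A guard takes text and decides whether it may pass.
Guard = Callable[[str], GuardResult]

BLOCKED_MESSAGE = "Sorry, I can't help with that request."


def keyword_guard(banned: list[str]) -> Guard:
    """Build a toy guard that blocks text containing any banned phrase."""
    def check(text: str) -> GuardResult:
        lowered = text.lower()
        for phrase in banned:
            if phrase in lowered:
                return GuardResult(False, f"matched {phrase!r}")
        return GuardResult(True)
    return check


def guarded_call(
    prompt: str,
    model: Callable[[str], str],
    input_guard: Guard,
    output_guard: Guard,
) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if not input_guard(prompt).allowed:
        return BLOCKED_MESSAGE  # The model never sees the prompt.
    response = model(prompt)
    if not output_guard(response).allowed:
        return BLOCKED_MESSAGE  # The user never sees the response.
    return response


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    input_guard = keyword_guard(["admin override"])
    output_guard = keyword_guard(["secret key"])
    print(guarded_call("hello", echo_model, input_guard, output_guard))
    print(guarded_call("ADMIN OVERRIDE now", echo_model, input_guard, output_guard))
```

In a real deployment the two guard functions would call a managed service or a dedicated classifier, and the same wrapper could be extended to screen tool outputs as well.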

5. Disparities Between Open-Source and Commercial Models

There is a clear gap in the resilience to attacks between open-source and commercial LLMs.

- Commercial models generally have more built-in protections because they offer complete generative AI applications, including memory, new features, authentication tools, and more.

- In contrast, open-source models (such as Meta’s Llama models) require the host to manage the orchestration and security of the LLM, placing more responsibility on the user.

6. There Will Be a Shared Responsibility for GenAI Security

I believe LLM providers, app builders, and app users will all take part in securing GenAI. Organizations will not be able to outsource securing GenAI, nor will they be able to indemnify away the risks of GenAI applications in their businesses. Even with commercial models, leaders need to monitor every level of the stack. Security must be continuously maintained and monitored, especially as more generative AI applications are deployed in the future.

7. Insights and Practical Examples

Pillar’s report provides six real-world examples of jailbreaks, giving readers a tangible understanding of the techniques used and their implications. The report is a valuable resource for anyone involved in AI security, offering a snapshot of the current state and actionable insights on how to prepare for emerging threats in 2025 and beyond.

Final Thoughts

Pillar’s report on The State of Attacks on Generative AI is a great read for anyone who is interested in securing GenAI in their business or is evaluating whether to adopt GenAI applications. It offers relevant GenAI telemetry data, practical examples, helpful insights, and a forward-looking perspective.

If you’re working with generative AI or planning to, I highly recommend downloading the report—it’s free and full of actionable insights to help you stay secure.