Navigating the cybersecurity landscape is a complex challenge for many business stakeholders. I have years of experience in technology, with the last three focused on cybersecurity, and I see three primary reasons this field is so confusing and challenging for business decision-makers to grasp. Here’s my take on why cybersecurity is a unique challenge, and why it doesn’t have to be quite so complicated.

1. Constant Threats from Bad Actors

One of the biggest challenges in cybersecurity is the persistent threat of bad actors, whether they’re financially motivated criminals or nation-state hackers. These attackers are always looking for vulnerabilities to infiltrate and exploit for their own gain. This can take the form of ransomware, where hackers hold your customer data hostage until you pay, or of state-sponsored attacks aimed at stealing intellectual property or gaining a technological advantage.

Because these threats are always evolving, organizations must remain in a constant state of vigilance. You never know when the next “zero-day” vulnerability will appear: a flaw that defenders didn’t know about, and therefore had no patch for, until attackers exploited it. The result is a treadmill effect, with businesses always racing to patch the latest known flaw or contain an unexpected one. It’s like trying to maintain perfect health: an ongoing effort with no finish line in sight.

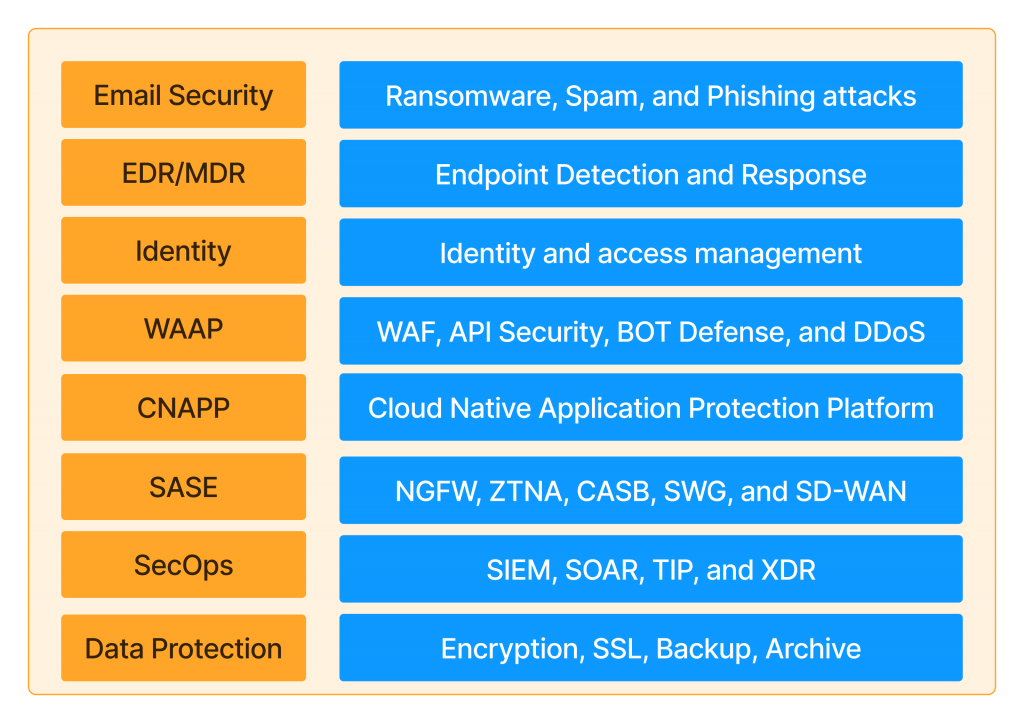

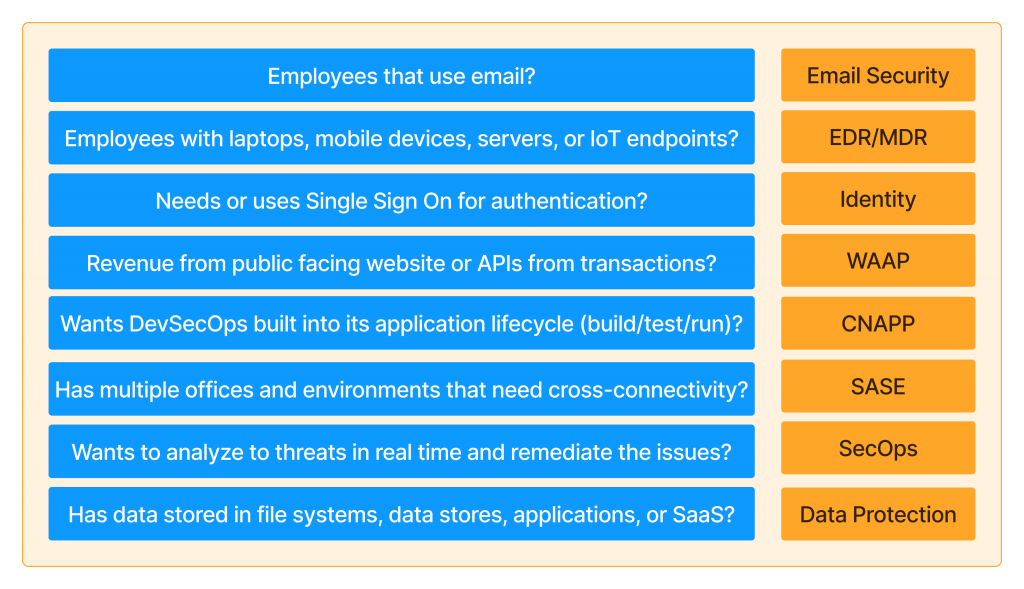

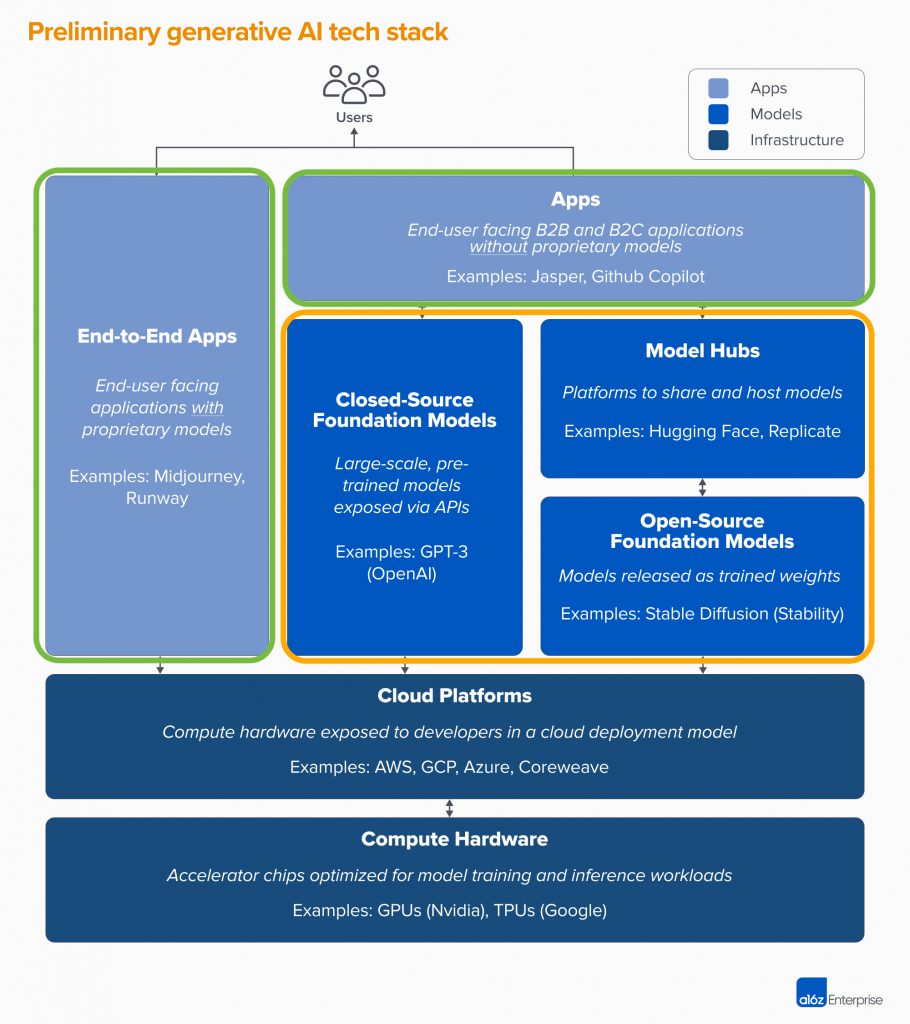

2. Innovation and Fragmentation in the Cybersecurity Market

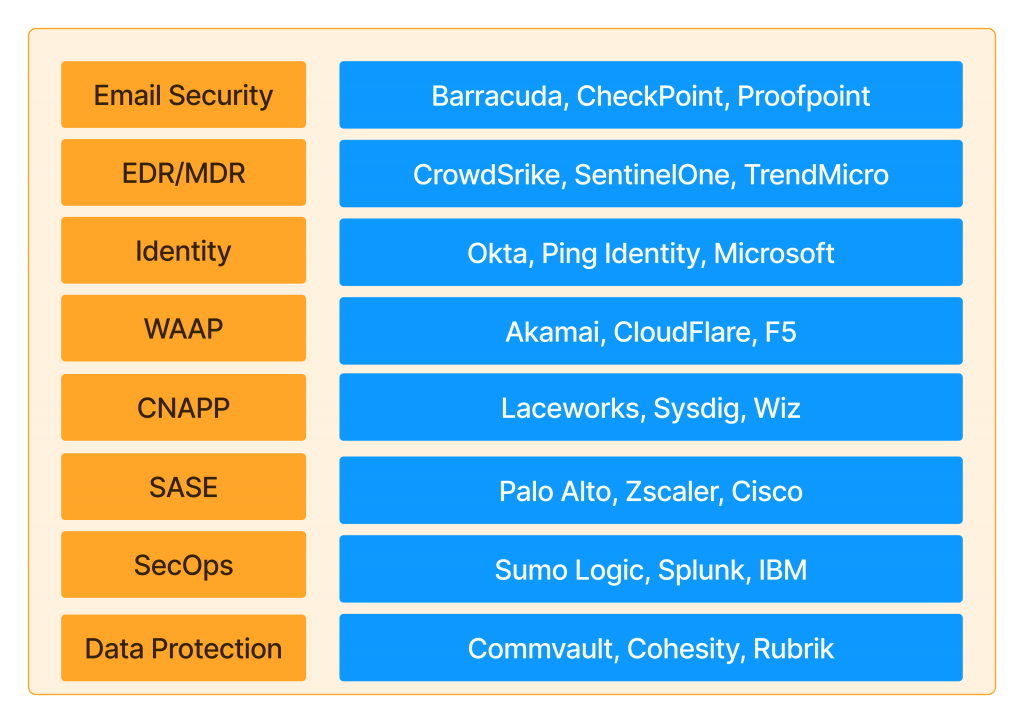

The rapid evolution of threats has led to a continuous wave of new security technologies and startups. Every year, new products and services enter the market, each claiming to be the ultimate solution. But no single purchase finishes the job. Just as a car or a house needs regular maintenance, staying secure requires ongoing effort. Cybersecurity isn’t something you can address once and forget about; it demands constant attention and investment.

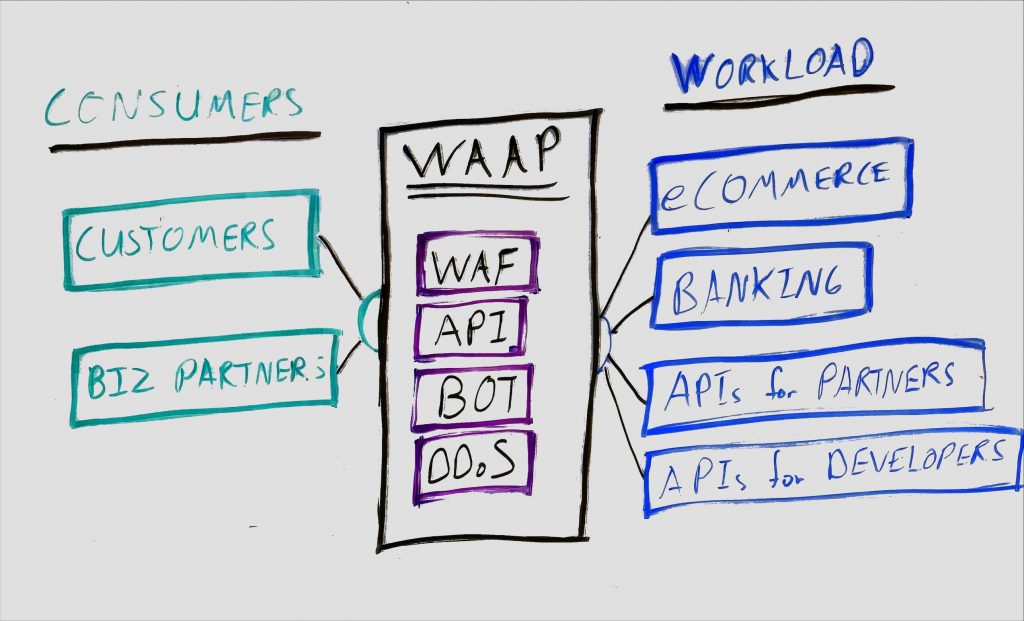

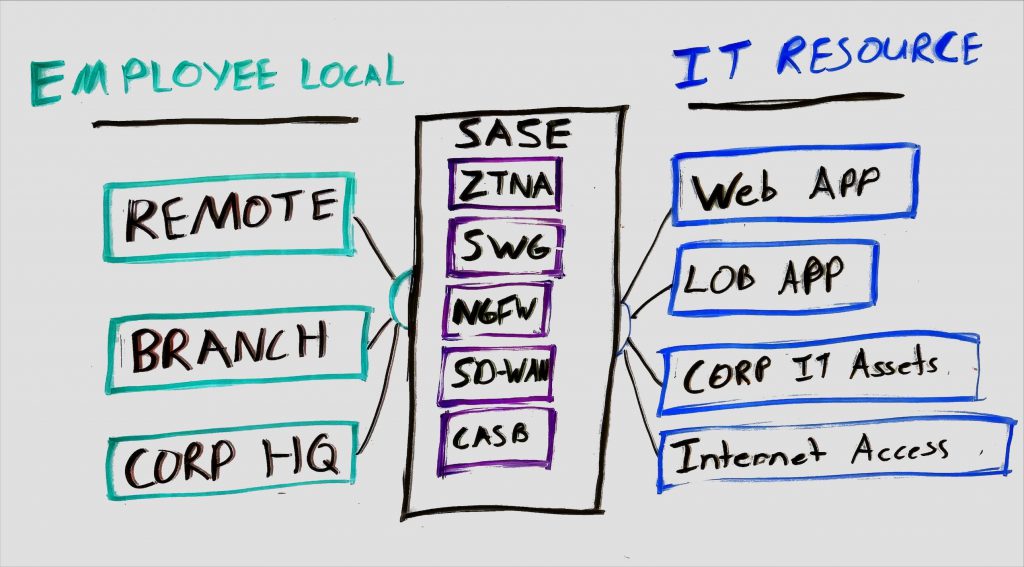

The market’s constant innovation can be overwhelming, especially for business leaders who want a “one-stop solution.” Big players like Palo Alto Networks, CrowdStrike, and others have started consolidating multiple security capabilities into single platforms, promising a simpler, all-in-one experience. This can reduce the risk of security gaps between different tools, but it also requires stakeholders to trust one provider for everything.

3. The Human Factor

Even with the best technology in place, humans remain a weak link in cybersecurity. Studies show that upwards of 60% of security breaches stem from human error or manipulation, such as social engineering and phishing attacks. These attacks often arrive via email, with employees inadvertently clicking malicious links or responding to fake invoices.

New technologies, such as AI-driven phishing and deepfake voice scams, make it even harder for people to distinguish between legitimate and malicious interactions. No matter how sophisticated a company’s defenses are, a single lapse in judgment by an employee can undermine everything. This human element is why cybersecurity remains a challenging field; as long as people make mistakes, there will always be vulnerabilities.

The Bottom Line

Cybersecurity is confusing because of three main issues: ever-evolving threats, a fragmented market of new solutions, and the inherent unpredictability of human behavior. For business stakeholders, these complexities make it hard to feel truly secure, because cybersecurity requires both a technological and a human-centric approach. It is a complex field, but understanding these factors can help decision-makers navigate it more confidently.

Stay tuned for more insights on simplifying and strengthening your cybersecurity approach.