This article aims to give business stakeholders an understanding of the major components of GenAI so they can effectively navigate the GenAI noise and have productive conversations internally and with trusted partners.

The recent advancements in generative AI are driving a race among businesses to capitalize on and monetize GenAI. While there is no lack of content on GenAI, I’ve found that much of it focuses on consumer productivity hacks, deeply technical research papers on arXiv, or code frameworks and GitHub repositories. My focus is on how business stakeholders should approach embedding GenAI in their companies and products through the lens of revenue growth, costs, risks, and sustainable competitive differentiators.

Section One: Generative AI and Foundation Models

Generative AI is based on what the industry refers to as foundation models – large-scale machine learning models trained on massive datasets, typically text or images. These models learn patterns, structures, and nuances from the data they’re trained on, enabling them to generate content, answer questions, translate languages, and more. Some of the most popular Generative AI use cases now include:

- Large language models (LLMs) such as ChatGPT

- Image generators (text-to-image) such as Midjourney or Stable Diffusion

- Code generation tools (which use LLMs fine-tuned on code) such as Amazon CodeWhisperer or GitHub Copilot

- Audio generation tools such as VALL-E

Section Two: Deployment and Consumption of Generative AI

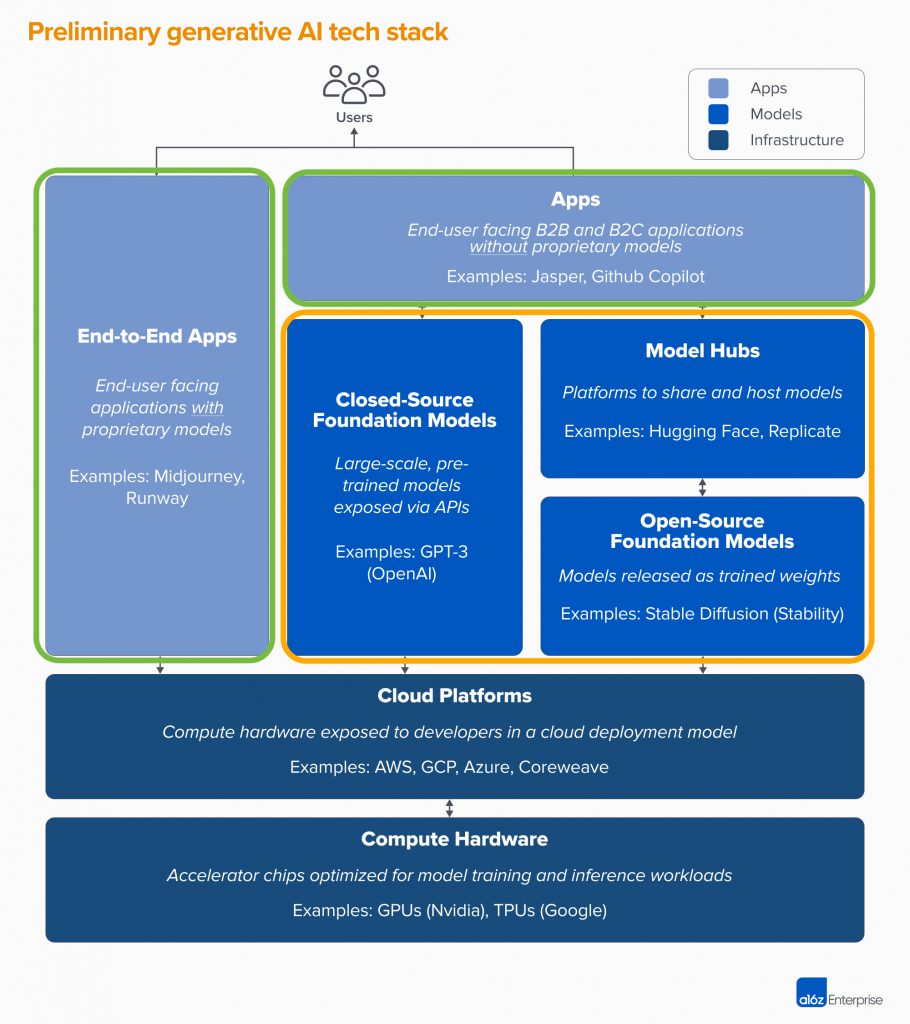

Deployment and consumption of GenAI vary greatly. I’ve highlighted, in green or orange, the parts of the tech stack diagram that cover the primary areas of today’s GenAI landscape business stakeholders should work through for their company. For most business stakeholders, the key question is which of the following three models benefits you the most.

- Use (consume) an off-the-shelf software solution that uses GenAI to reduce costs. Not many B2B firms have launched GenAI features outside of Salesforce’s Einstein GPT.

- Consume an existing GenAI-aaS such as ChatGPT and embed (deploy) the API’s functionality in your company’s products, services, or internal applications to drive revenue or lower costs.

- Fine-tune an existing open-source foundation model with proprietary data, and deploy it on a cloud or internal infrastructure. Embed the model outputs in your products, services, or internal applications as a competitive differentiator.
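For readers who want a feel for what the second option (embedding a GenAI API in your own application) involves, here is a minimal Python sketch. Everything named in it is an assumption for illustration: the endpoint URL, the model name, the `reply` field in the response, and the helper functions are hypothetical and do not describe any specific vendor’s API. The network call is passed in as a function so the example runs without credentials.

```python
from typing import Callable

# Hypothetical endpoint and model name, for illustration only.
API_URL = "https://api.example.com/v1/chat"
MODEL_NAME = "example-model"


def build_request(user_text: str, instructions: str) -> dict:
    """Build the JSON payload an application might send to a hosted model."""
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": user_text},
        ],
    }


def summarize_ticket(ticket_text: str, send: Callable[[str, dict], dict]) -> str:
    """Ask the hosted model for a one-sentence summary of a support ticket.

    `send` performs the actual HTTP POST. It is injected so the vendor
    can be swapped, or replaced by a fake in tests.
    """
    payload = build_request(
        ticket_text, "Summarize the customer's issue in one sentence."
    )
    response = send(API_URL, payload)
    # Assumed response shape: {"reply": "<model text>"}.
    return response["reply"].strip()


def fake_send(url: str, payload: dict) -> dict:
    """Stand-in for the real network call, returning a canned answer."""
    return {"reply": " Customer cannot reset their password. "}


if __name__ == "__main__":
    print(summarize_ticket("I tried the reset link three times...", fake_send))
```

The point for a business reader is that the integration itself is a thin layer: most of the effort goes into deciding what to send, what to do with the answer, and which vendor sits behind `send`.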

Section Three: Pre-trained vs. Fine-tuned vs. From-Scratch Models

The decision between using a pre-trained service such as ChatGPT, fine-tuning an open large language model (LLM) with your data, or training and deploying your own LLM from scratch hinges on several factors – time, cost, skillset, and specificity of the task.

Pre-trained services offer a cost-effective and timely solution, requiring minimal expertise and effort to integrate into your existing processes. However, they might not always provide the level of customization needed for niche applications.

Training and deploying your own LLM from scratch gives the highest degree of customization. Still, it requires significant resources – a dedicated team of AI experts, lots of data, substantial computational resources, and considerable time investment.

Fine-tuning an open-source LLM from providers such as Hugging Face and Meta AI offers a middle ground. You get the benefits of a pre-trained model plus customization for specific use cases. However, it requires expertise in machine learning, access to relevant data for fine-tuning, and infrastructure to host your model endpoints.

Section Four: Open vs. Closed Models

When it comes to open versus closed foundation models, the key differences revolve around transparency, control, and cost. Open-source models generally offer more transparency and flexibility – you can examine, modify, and fine-tune the model as you please. However, they may require a more sophisticated skill set to utilize effectively.

On the other hand, closed models are typically proprietary, meaning the inner workings are not fully disclosed. They often come with customer support and might be better suited for business leaders who prefer an off-the-shelf solution. However, they can be more costly and offer less flexibility than their open-source counterparts.

Conclusion

Understanding the tech stack and associated landscape of generative AI is crucial for business leaders to have informed discussions. In general, we’re seeing less of a focus on increasing the number of parameters and more on fine-tuning models with proprietary data. I believe data will be the biggest differentiator as more websites change their terms of use to prohibit web scraping for the training of third-party models.

We didn’t even get into the business considerations of whether you are creating a sustainable competitive advantage with GenAI, the cost implications of GenAI on your margins, and product-customer fit, but I will address those in a future blog post. There are more questions than answers, but it’s clear GenAI is more than hype, and everyone should be prepared for the long game.